Robot Learning: Goal-Conditioned Planning

Author: Montreal Robotics

Uploaded: 2025-03-24

Views: 252

Many recent foundation model papers use goal conditioning and hierarchical planning. What are the best methods for training these models? What goal distribution is best for training? How can we think about the generalization that will enable better model reuse? In this lecture, I connect these concepts to foundation models and give more detailed background on them.

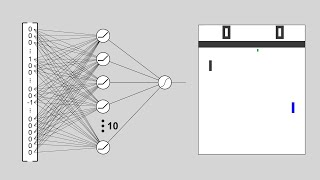

I talk about how traditional RL can struggle with long trajectories and complex tasks, like cooking soup, which break down into many smaller, repetitive actions. Instead of training a separate policy for each action, I suggest using a single policy conditioned on a goal. We first look at one-hot encoded vectors as the context, but that encoding doesn't scale well, because the vector grows with the number of tasks, and it can't express combinations of tasks. We then discuss a continuous representation of the goal, which is more efficient and flexible. When the goal is represented in the same space as the state, the agent can learn a single policy that achieves a wide range of goals. We also touch on how goal conditioning helps with reusability and robustness, since the policy learns to handle variations within the task.
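To make the contrast between the two kinds of context concrete, here is a minimal sketch in Python with NumPy. It is not code from the lecture: the 2D point environment, the function names `one_hot_context`, `policy`, and `rollout`, and the hand-written proportional controller that stands in for a learned policy are all assumptions chosen for illustration.

```python
import numpy as np


def one_hot_context(task_id, num_tasks):
    """One-hot task context: its length grows with the number of tasks."""
    ctx = np.zeros(num_tasks)
    ctx[task_id] = 1.0
    return ctx


def policy(state, goal, gain=0.5, max_step=1.0):
    """Hypothetical stand-in for a learned goal-conditioned policy pi(a | s, g).

    The goal lives in the same space as the state, so the policy can act on
    the difference between them. A trained network would replace this rule.
    """
    action = gain * (goal - state)
    return np.clip(action, -max_step, max_step)


def rollout(start, goal, steps=50):
    """Apply the same policy repeatedly in a toy world where state += action."""
    state = np.asarray(start, dtype=float)
    goal = np.asarray(goal, dtype=float)
    for _ in range(steps):
        state = state + policy(state, goal)
    return state


if __name__ == "__main__":
    # The one-hot context needs one slot per task.
    print(one_hot_context(2, num_tasks=4))
    # One policy, many goals: only the goal vector changes between runs.
    for goal in ([3.0, -2.0], [-1.0, 4.0], [0.5, 0.5]):
        print(goal, rollout([0.0, 0.0], goal))
```

The one-hot context has to be resized whenever a task is added, while the continuous goal keeps a fixed dimension and lets the same function be reused for goals it was never given a dedicated slot for.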
