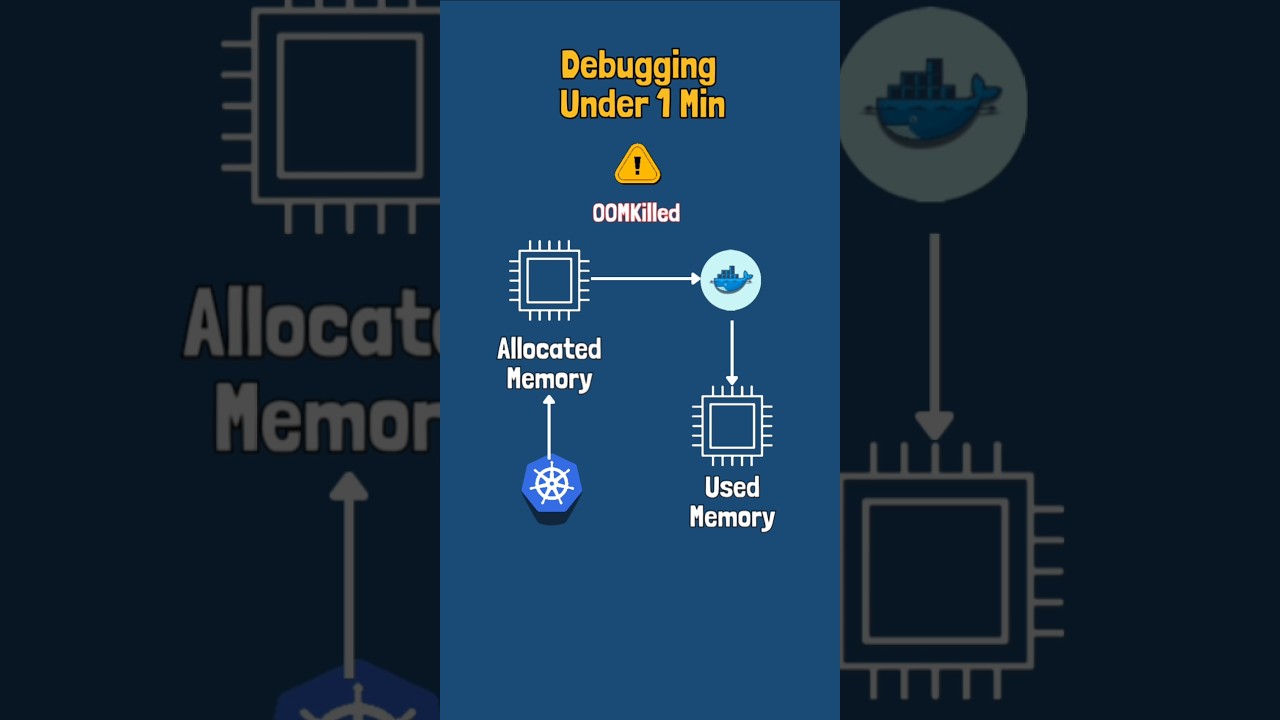

Fixing Kubernetes OOMKilled for a Python App Pod by Increasing Memory Limits

Author: TechWorld With Sahana

Uploaded: 2025-08-09

Views: 1425

Possible root causes (RCA) when a Python pod is repeatedly OOMKilled

1. Memory leaks in Python code: objects are never released because references to them are kept alive.

➡ Locate the leak with tracemalloc or objgraph, then remove the references that keep unused objects alive.

2. Large in-memory data processing: entire datasets or files are loaded into RAM at once.

➡ Stream or chunk data; process in batches instead of all at once.
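A minimal sketch of batch processing with only the standard library: the file is iterated lazily and never held in memory as a whole, so peak usage is bounded by batch_size rather than by file size. The headerless "id,amount" CSV layout and the sum_amounts helper are hypothetical.

```python
import itertools
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def in_batches(items: Iterable[T], batch_size: int = 1_000) -> Iterator[List[T]]:
    """Yield lists of at most batch_size items without materializing the input."""
    it = iter(items)
    while True:
        batch = list(itertools.islice(it, batch_size))
        if not batch:
            return
        yield batch


def sum_amounts(path: str, batch_size: int = 1_000) -> float:
    """Sum the 2nd column of a headerless 'id,amount' CSV, one batch at a time."""
    total = 0.0
    with open(path, encoding="utf-8") as f:  # file objects are read line by line
        for batch in in_batches(f, batch_size):
            total += sum(float(line.split(",")[1]) for line in batch)
    return total
```

With pandas, the equivalent is passing chunksize to read_csv, which returns an iterator of DataFrames instead of one large frame.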

3. Inefficient library usage: libraries such as pandas, NumPy, or PIL allocating more memory than needed, for example through intermediate copies.

➡ Profile with memory_profiler; switch to lighter libraries or avoid unnecessary copies.
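memory_profiler is a third-party package, so this sketch uses the standard library's tracemalloc to measure the peak allocation of two ways of extracting a message body. It is a stand-in for the "avoid unnecessary copies" advice rather than a pandas/NumPy/PIL example: slicing bytes copies the data, while slicing a memoryview does not. The message layout and helper names are hypothetical.

```python
import tracemalloc


def peak_traced_bytes(fn, *args) -> int:
    """Peak Python-level allocation (bytes) while fn(*args) runs."""
    tracemalloc.start()
    try:
        fn(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak


def body_length_copy(message: bytes, header_size: int) -> int:
    body = message[header_size:]  # slicing bytes allocates a full copy of the body
    return len(body)


def body_length_view(message: bytes, header_size: int) -> int:
    body = memoryview(message)[header_size:]  # zero-copy view of the same buffer
    return len(body)


if __name__ == "__main__":
    msg = bytes(20_000_000)  # allocated before tracing starts, so not counted
    print("copy:", peak_traced_bytes(body_length_copy, msg, 64))
    print("view:", peak_traced_bytes(body_length_view, msg, 64))
```

The same distinction exists in NumPy, where basic slicing returns a view and advanced (fancy) indexing returns a copy.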

4. Improper multithreading/multiprocessing: spawning too many workers, each adding to the pod's total memory use.

➡ Limit worker processes/threads; ensure shared memory is used efficiently.
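One way to limit workers is to derive the count from the container's memory budget instead of from the CPU count alone. A sketch, assuming cgroup v2 (where the limit is exposed in /sys/fs/cgroup/memory.max) and a per-worker footprint you have measured yourself; the 200 MiB per worker, the 128 MiB reserve, and the 1 GiB fallback below are illustrative assumptions.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional

MIB = 1024 * 1024


def read_cgroup_memory_limit(path: str = "/sys/fs/cgroup/memory.max") -> Optional[int]:
    """Container memory limit in bytes (cgroup v2), or None if unlimited/unreadable."""
    try:
        with open(path, encoding="ascii") as f:
            value = f.read().strip()
    except OSError:
        return None
    return None if value == "max" else int(value)


def safe_worker_count(memory_limit: int, per_worker: int, reserve: int = 0,
                      cpus: Optional[int] = None) -> int:
    """Cap workers by both CPU count and memory budget; always at least 1."""
    cpus = cpus or os.cpu_count() or 1
    by_memory = (memory_limit - reserve) // per_worker
    return max(1, min(cpus, by_memory))


if __name__ == "__main__":
    limit = read_cgroup_memory_limit() or 1024 * MIB  # assumed 1 GiB fallback
    workers = safe_worker_count(limit, per_worker=200 * MIB, reserve=128 * MIB)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        print(workers, list(pool.map(lambda x: x * x, range(4))))
```

The same number can be passed to ProcessPoolExecutor(max_workers=...) or to a server's worker setting (e.g. gunicorn --workers); processes are usually the more important case because each one carries its own copy of the interpreter and loaded data.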

5. Memory limit set too low in the Kubernetes YAML: not enough headroom for peak usage.

➡ Increase 'resources.limits.memory' in the Deployment spec based on observed peak usage (e.g. from kubectl top pod or your metrics stack).
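"Based on observed usage" can be made concrete as observed peak plus a safety margin, rounded up to a Kubernetes memory quantity. A sketch, assuming a 25% headroom (a judgment call, not a Kubernetes default) and a peak value you have already collected; the helper names are hypothetical.

```python
import math

MIB = 1024 * 1024


def recommend_memory_limit(observed_peak_bytes: int, headroom: float = 0.25) -> str:
    """Observed peak plus headroom, rounded up to whole MiB ('Mi' quantity)."""
    mib = math.ceil(observed_peak_bytes * (1 + headroom) / MIB)
    return f"{mib}Mi"


def resources_limits(observed_peak_bytes: int, headroom: float = 0.25) -> dict:
    """Same value shaped like the resources.limits.memory path in the pod spec."""
    return {"resources": {"limits": {"memory": recommend_memory_limit(observed_peak_bytes, headroom)}}}


if __name__ == "__main__":
    print(resources_limits(512 * MIB))
```

For example, an observed peak of 512 MiB with 25% headroom gives 640Mi. If usage keeps climbing after the limit is raised, the cause is more likely one of the other items in this list than a limit that is simply too low.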

6. Unbounded caching: in-memory caches (e.g. a plain dict, or functools.lru_cache with maxsize=None) growing without eviction.

➡ Add cache eviction policies or limit cache size.
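Two standard-library ways to bound a cache: give functools.lru_cache a finite maxsize, or, when a decorator does not fit, keep an OrderedDict and evict the least recently used entry once it exceeds a size cap. The cached function and the BoundedCache class are hypothetical examples.

```python
from collections import OrderedDict
from functools import lru_cache


@lru_cache(maxsize=128)  # bounded: least recently used entries are evicted past 128
def expensive_lookup(key: int) -> int:
    return key * key  # stand-in for a slow computation (hypothetical)


class BoundedCache:
    """Minimal LRU cache with a hard item limit."""

    def __init__(self, max_items: int = 1_000):
        self.max_items = max_items
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.max_items:
            self._data.popitem(last=False)  # evict the least recently used entry

    def __len__(self):
        return len(self._data)
```

Note that the cap is on the number of entries, not bytes; if values vary a lot in size, choose max_items from the largest realistic entry.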

7. Sustained traffic spikes: continuous load causing memory to build up gradually.

➡ Implement autoscaling; optimize request handling to reduce memory per request.
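For autoscaling, the Kubernetes Horizontal Pod Autoscaler computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue) and skips scaling when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that formula only; it ignores min/max replicas, stabilization windows, and the handling of not-ready pods.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 3 pods averaging 900Mi against a 600Mi target
    print(desired_replicas(3, 900, 600))
```

Scaling on memory only helps when per-pod memory actually drops as load is spread across more replicas; a pod that leaks memory will keep growing regardless of the replica count.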

8. Native extensions (C/C++ libraries): memory they allocate directly is not tracked by Python's garbage collector or by tracemalloc.

➡ Monitor RSS (resident set size) at the OS level; update or patch the problematic libraries.
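A sketch of reading RSS from inside the process, assuming Linux (the /proc/self/status VmRSS field) with a getrusage-based peak as a second signal. If RSS keeps rising while tracemalloc's traced total stays flat, the growth is likely outside the Python allocator, which points at native code.

```python
import resource
import sys


def current_rss_bytes() -> int:
    """Current resident set size, read from /proc (Linux only)."""
    with open("/proc/self/status", encoding="utf-8", errors="replace") as f:
        for line in f:
            if line.startswith("VmRSS:"):
                return int(line.split()[1]) * 1024  # value is reported in kB
    raise RuntimeError("VmRSS not found in /proc/self/status")


def peak_rss_bytes() -> int:
    """Peak RSS via getrusage; ru_maxrss is in kB on Linux but bytes on macOS."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return peak if sys.platform == "darwin" else peak * 1024


if __name__ == "__main__":
    print("current:", current_rss_bytes(), "peak:", peak_rss_bytes())
```

Inside a pod, the container's cgroup usage (which the OOM killer acts on) can differ from process RSS, for example because it also counts page cache, so treat RSS as a trend indicator rather than the exact number Kubernetes compares against the limit.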

#devopsinterviewquestions #interviewtips #troubleshooting
