ESWEEK 2021 Education - Spiking Neural Networks

Author: Embedded Systems Week (ESWEEK)

Uploaded: 2021-11-03

Views: 26050

ESWEEK 2021 - Education Class C1, Sunday, October 10, 2021

Instructor: Priyadarshini Panda, Yale

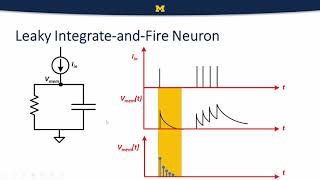

Abstract: Spiking Neural Networks (SNNs) have recently emerged as an alternative to deep learning due to their large energy-efficiency benefits on neuromorphic hardware. In this presentation, we suggest important techniques for training SNNs that bring substantial benefits in terms of latency, accuracy, interpretability, and robustness. We will first delve into how training is performed in SNNs. Training SNNs with surrogate gradients offers computational benefits due to short latency and is also considered a more bio-plausible approach. However, because spiking neurons are non-differentiable, training becomes problematic, and surrogate-gradient methods have thus been limited to shallow networks compared to the conversion method. To address this training issue with surrogate gradients, we will also go over a recently proposed method, Batch Normalization Through Time (BNTT), which allows us to target interesting applications with SNNs beyond traditional image classification, such as video segmentation. Another critical limitation of SNNs is the lack of interpretability. While considerable attention has been given to optimizing SNNs, the development of explainability is still in its infancy. I will talk about our recent work on a bio-plausible visualization tool for SNNs, called Spike Activation Map (SAM), which is compatible with BNTT training. The proposed SAM highlights spikes with short inter-spike intervals, which contain discriminative information for classification. Finally, with the proposed BNTT and SAM, I will highlight the robustness of SNNs with respect to adversarial attacks. In the end, I will talk about interesting prospects of SNNs for non-conventional learning scenarios such as federated and distributed learning.
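
The abstract notes that spiking neurons are non-differentiable, which is the problem surrogate-gradient training works around. The following is a minimal sketch of that general idea, not the method presented in the talk. It assumes a single leaky integrate-and-fire (LIF) neuron with a hard reset and uses the derivative of a sigmoid as the surrogate. The forward pass keeps the hard step function, while the backward pass substitutes a smooth derivative. The leak factor, threshold, steepness `alpha`, and the helper names `heaviside`, `surrogate_grad`, and `lif_forward` are all illustrative assumptions.

```python
import math


def heaviside(v, threshold=1.0):
    """Forward pass: emit a spike (1.0) when the membrane potential reaches the threshold."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, alpha=2.0):
    """Backward pass (assumed surrogate): derivative of a sigmoid centered at the
    threshold. It stands in for the true derivative of the step function, which
    is zero almost everywhere and would block gradient flow."""
    s = 1.0 / (1.0 + math.exp(-alpha * (v - threshold)))
    return alpha * s * (1.0 - s)


def lif_forward(inputs, leak=0.9, threshold=1.0):
    """Simulate one hypothetical LIF neuron over len(inputs) time steps.

    Returns the spike train and the membrane potential at each step,
    recorded after integration and before the reset."""
    v = 0.0
    spikes, potentials = [], []
    for x in inputs:
        v = leak * v + x          # leaky integration of the input current
        potentials.append(v)
        s = heaviside(v, threshold)
        spikes.append(s)
        v = v * (1.0 - s)         # hard reset to zero after a spike
    return spikes, potentials


if __name__ == "__main__":
    spikes, potentials = lif_forward([0.5, 0.6, 0.2, 0.9])
    print("spikes:", spikes)
    # Surrogate derivatives at each recorded potential; these would be used
    # during backpropagation through time in place of the step's zero gradient.
    print("surrogate grads:", [round(surrogate_grad(v), 3) for v in potentials])
```

In a framework with automatic differentiation, `heaviside` would typically be the forward function of a custom operation and `surrogate_grad` would be returned by its backward function. This lets gradients flow through spike events when the network is unrolled over time steps.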

Bio: Priya Panda is an assistant professor in the electrical engineering department at Yale University, USA. She received her B.E. and Master’s degree from BITS, Pilani, India in 2013 and her PhD from Purdue University, USA in 2019. During her PhD, she interned at Intel Labs, where she developed large-scale spiking neural network algorithms for benchmarking the Loihi chip. She is the recipient of the 2019 Amazon Research Award. Her research interests include neuromorphic computing, deep learning, and algorithm-hardware co-design for robust and energy-efficient machine intelligence.
