Mastering Gradient Descent: Batch, Stochastic & Mini-Batch Explained with Python

Author: Cloudvala

Uploaded: 2025-02-20

Views: 60

Gradient Descent is a key optimization algorithm in Machine Learning & Deep Learning. In this video, we explain:

✅ Batch Gradient Descent (BGD) – Uses the full dataset for every update

✅ Stochastic Gradient Descent (SGD) – Updates after each individual sample (faster per update, but noisy)

✅ Mini-Batch Gradient Descent (MBGD) – Updates on small batches of samples, often the best balance of speed & accuracy
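The three variants differ only in which samples enter each gradient step. As a sketch, assuming a loss averaged over $n$ training samples, $J(\theta) = \frac{1}{n}\sum_{i=1}^{n} \ell_i(\theta)$, and a learning rate $\eta$ (this notation is chosen here for illustration, not taken from the video), the update rules are:

$$
\begin{aligned}
\text{BGD:} \quad & \theta \leftarrow \theta - \eta \, \frac{1}{n}\sum_{i=1}^{n} \nabla \ell_i(\theta) \\
\text{SGD:} \quad & \theta \leftarrow \theta - \eta \, \nabla \ell_i(\theta), \quad i \text{ picked at random} \\
\text{MBGD:} \quad & \theta \leftarrow \theta - \eta \, \frac{1}{|B|}\sum_{i \in B} \nabla \ell_i(\theta), \quad B \subset \{1, \dots, n\}
\end{aligned}
$$

A BGD step needs $n$ per-sample gradients, an SGD step needs one, and an MBGD step needs $|B|$, which is why SGD updates are cheap but noisy and mini-batches sit in between.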

💡 What You’ll Learn:

✔️ How Gradient Descent works in ML models

✔️ Differences between Batch, Stochastic & Mini-Batch GD

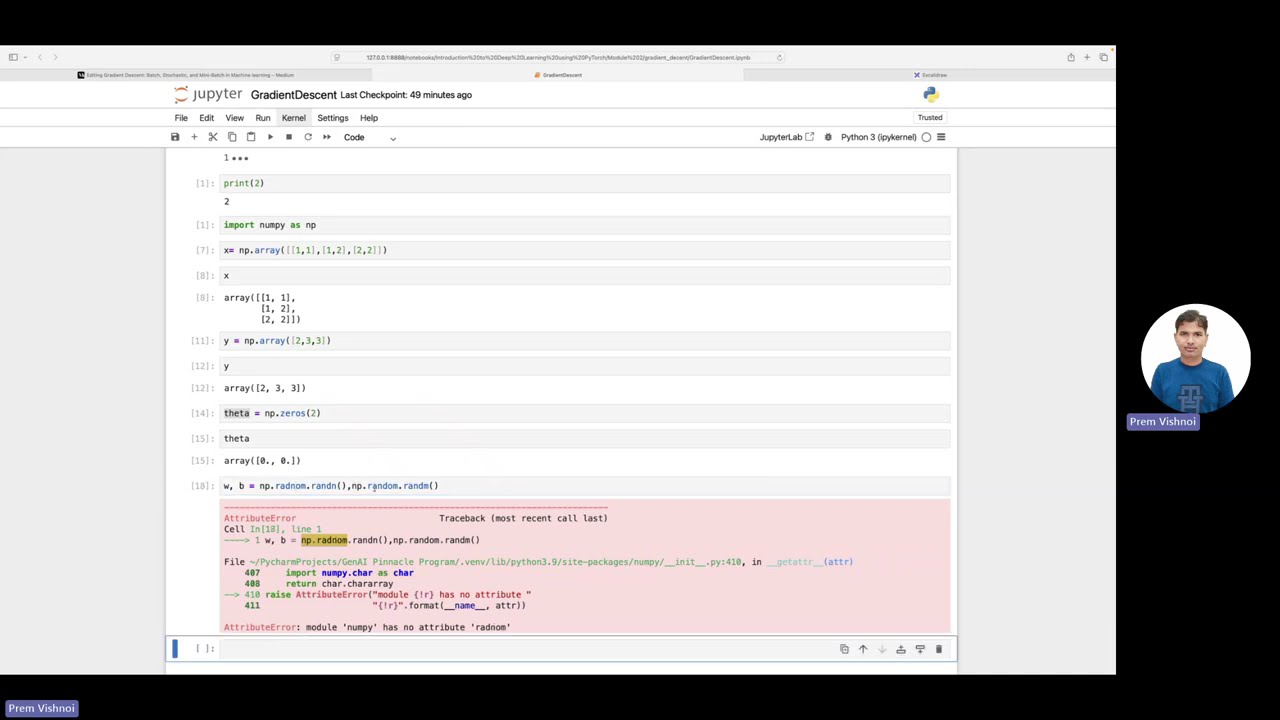

✔️ Hands-on Python implementation of all three methods 🐍
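A minimal pure-Python sketch of all three methods, assuming a one-feature linear regression ($y \approx wx + b$) trained on a mean-squared-error loss. The helper names `mse_gradient` and `gradient_descent`, the toy data, and the hyperparameters (`lr`, `epochs`, `seed`) are hypothetical choices for illustration, not the code from the video. The only thing that switches between BGD, SGD and MBGD is the `batch_size` argument.

```python
import random


def mse_gradient(w, b, batch):
    """Gradient of 0.5 * mean((w*x + b - y)**2) over a batch of (x, y) pairs."""
    n = len(batch)
    grad_w = sum((w * x + b - y) * x for x, y in batch) / n
    grad_b = sum((w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def gradient_descent(data, batch_size, lr=0.1, epochs=500, seed=0):
    """Fit y ≈ w*x + b (hypothetical helper, not from the video).

    batch_size == len(data)    -> batch gradient descent (one update per epoch)
    batch_size == 1            -> stochastic gradient descent (one update per sample)
    1 < batch_size < len(data) -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    samples = list(data)  # copy so the caller's list is not reordered
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # new sample order each epoch
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            grad_w, grad_b = mse_gradient(w, b, batch)
            w -= lr * grad_w  # step against the gradient
            b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Toy noise-free data on the line y = 2x + 1, with x in [-1, 1]
    data = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
    for name, bs in [("batch", len(data)), ("stochastic", 1), ("mini-batch", 4)]:
        w, b = gradient_descent(data, batch_size=bs)
        print(f"{name:>10}: w={w:.3f}, b={b:.3f}")
```

On this noise-free toy data all three runs should approach w = 2, b = 1. With noisy data, SGD and small mini-batches keep fluctuating around the minimum unless the learning rate is decayed over time.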

🎯 Subscribe for more AI & ML tutorials!
