Uncovering the Computational Roles of Nonlinearity in Sequence Modeling - TMLR 2026

Author: Manuel

Uploaded: 2026-01-09


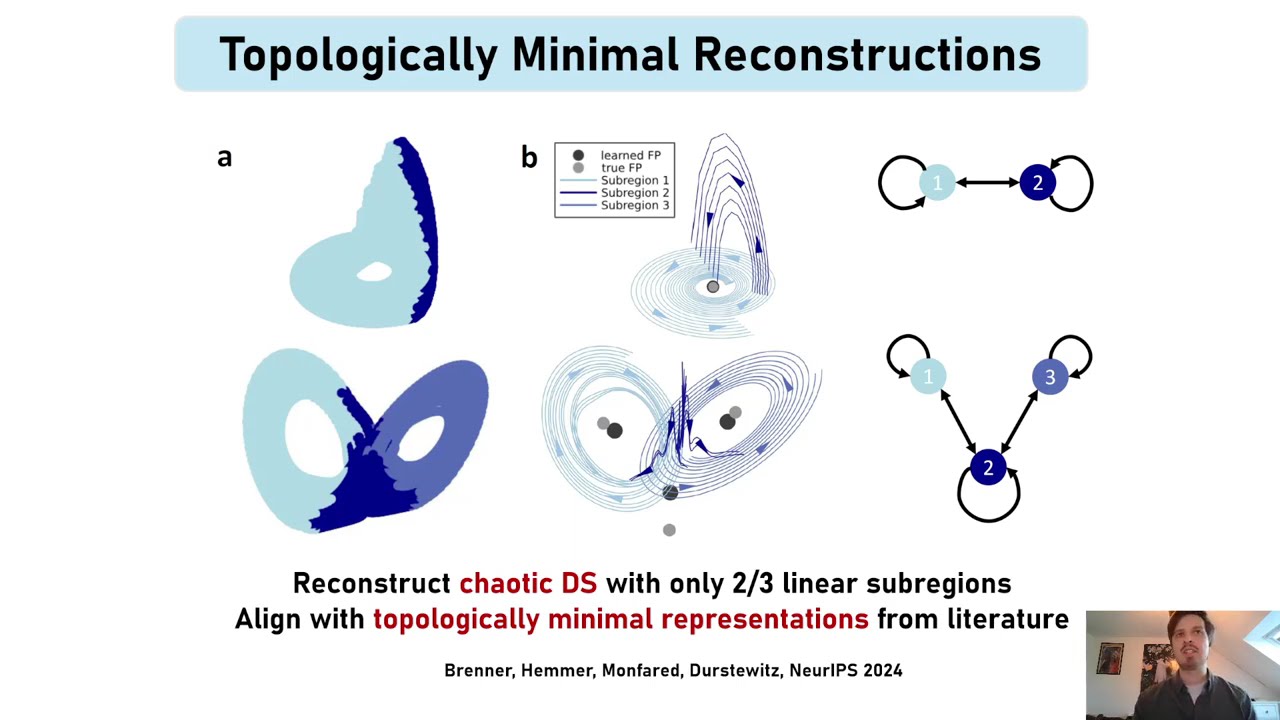

Sequence modeling tasks across domains such as natural language processing, time-series forecasting, speech recognition, and control require learning complex mappings from input to output sequences. In recurrent networks, nonlinear recurrence is theoretically required to universally approximate such sequence-to-sequence functions; yet in practice, linear recurrent models have often proven surprisingly effective. This raises the question of when nonlinearity is truly required. In this study, we present a framework to systematically dissect the functional role of nonlinearity in recurrent networks, allowing us to identify both when it is computationally necessary and what mechanisms it enables. We address the question using Almost Linear Recurrent Neural Networks (AL-RNNs), which allow the recurrence nonlinearity to be gradually attenuated and decompose network dynamics into analyzable linear regimes, making the underlying computational mechanisms explicit.

We illustrate the framework across a diverse set of synthetic and real-world tasks, including classic sequence modeling benchmarks, an empirical neuroscientific stimulus-selection task, and a multi-task suite. We demonstrate how the AL-RNN's piecewise linear structure enables direct identification of computational primitives such as gating, rule-based integration, and memory-dependent transients, revealing that these operations emerge within predominantly linear dynamical backbones. Across tasks, sparse nonlinearity plays several functional roles: it improves interpretability by reducing and localizing nonlinear computations, promotes shared (rather than highly distributed) representations in multi-task settings, and reduces computational cost by limiting nonlinear operations. Moreover, sparse nonlinearity acts as a useful inductive bias: in low-data regimes, or when tasks require discrete switching between linear regimes, sparsely nonlinear models often match or exceed the performance of fully nonlinear architectures. Our findings provide a principled approach for identifying where nonlinearity is functionally necessary in sequence models, guiding the design of recurrent architectures that balance performance, efficiency, and mechanistic interpretability.
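To make the "almost linear" idea concrete, here is a minimal sketch of a single recurrence step. It assumes the form z_t = A z_{t-1} + W Φ*(z_{t-1}) + h with a diagonal A, where Φ* applies a ReLU to only the last P of M latent units. The abstract does not spell out this equation, so the update rule, the function names (`partial_relu`, `al_rnn_step`, `linear_regime`), and the toy parameters are all illustrative assumptions rather than the authors' implementation. Under this reading, lowering P is one way to attenuate the nonlinearity, and the on/off pattern of the P ReLU units labels which of the up to 2^P linear regimes the state currently occupies.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def partial_relu(z: Sequence[float], num_relu: int) -> Vector:
    """Apply ReLU to the last `num_relu` units only; the rest pass through unchanged."""
    m = len(z)
    return [v if i < m - num_relu else max(0.0, v) for i, v in enumerate(z)]


def al_rnn_step(
    z: Sequence[float],
    a_diag: Sequence[float],
    w: Sequence[Sequence[float]],
    h: Sequence[float],
    num_relu: int,
) -> Vector:
    """One assumed update: z_t = A z_{t-1} + W phi(z_{t-1}) + h, with A diagonal."""
    phi = partial_relu(z, num_relu)
    m = len(z)
    return [
        a_diag[i] * z[i] + sum(w[i][j] * phi[j] for j in range(m)) + h[i]
        for i in range(m)
    ]


def linear_regime(z: Sequence[float], num_relu: int) -> Tuple[int, ...]:
    """On/off pattern of the ReLU units; each pattern indexes one linear regime."""
    return tuple(int(v > 0.0) for v in z[len(z) - num_relu:])


# Toy parameters (hypothetical): 3 latent units, 1 of them nonlinear.
a_diag = [0.5, 0.5, 0.5]
w = [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
h = [0.0, 0.0, 0.0]

z_off = [1.0, -2.0, -0.5]  # ReLU unit is negative, so it is gated off
z_on = [1.0, -2.0, 2.0]    # ReLU unit is positive, so it feeds into unit 0

print(linear_regime(z_off, 1), al_rnn_step(z_off, a_diag, w, h, 1))
print(linear_regime(z_on, 1), al_rnn_step(z_on, a_diag, w, h, 1))
```

In this toy setting the single ReLU unit behaves like a gate: when it is off, unit 0 simply decays, and when it is on, its value is added to unit 0. Setting `num_relu` to 0 makes the step fully linear, so the regime tuple is empty.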
