Random Forests Explained: How This Classic Machine Learning Algorithm Works

Author: Bright Science

Uploaded: 2026-01-15

This video explains Leo Breiman's landmark 2001 paper “Random Forests,” which introduced one of the most influential machine learning algorithms for classification and regression.

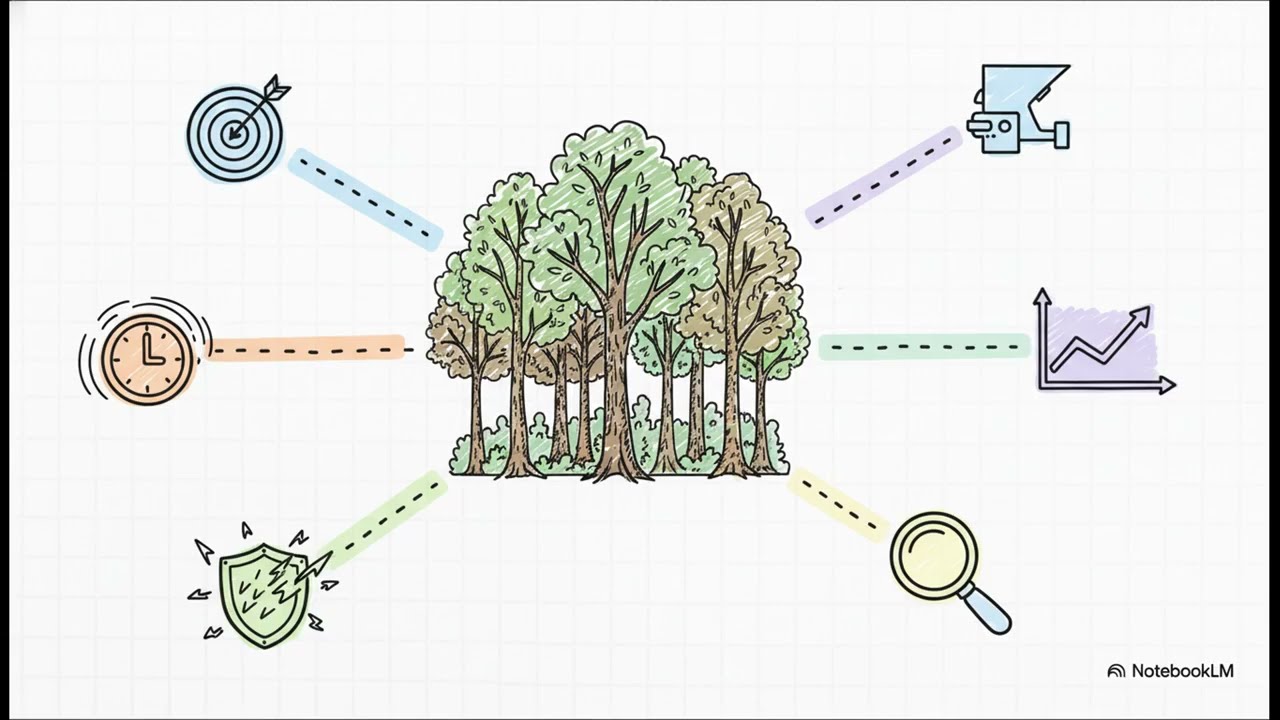

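Random Forests is an ensemble learning method that combines many decision trees, each grown on a bootstrap sample of the training data with a random subset of features considered at each split. The trees' predictions are aggregated by majority vote (classification) or averaging (regression) to improve predictive accuracy, robustness, and generalization.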
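A rough way to see why averaging many trees helps, and why the correlation between trees matters, is the standard variance argument (a textbook result, not taken from Breiman's paper). Assume each tree's prediction $T_b(x)$ has variance $\sigma^2$ and every pair of trees has correlation $\rho$. Then the average of $B$ trees has variance:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
= \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

As $B$ grows, the second term vanishes, but the first term $\rho\sigma^2$ remains. This is why injecting randomness to decorrelate the trees (smaller $\rho$) matters as much as adding more of them.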

🔍 In this video, you will learn:

What Random Forests is and why it was a breakthrough in machine learning

How combining many decision trees reduces overfitting

Why low correlation between trees improves model performance

The role of random feature selection at each split

Why Random Forests are more robust than methods like AdaBoost on noisy data

What out-of-bag error estimation is and why it matters

How variable importance is calculated in Random Forests
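The ideas in the list above (bootstrap sampling, random feature selection at each split, voting, out-of-bag error, and variable importance) can be tied together in a small from-scratch sketch. It is not Breiman's reference implementation and does not mirror any library's API. All function names (`build_tree`, `fit_forest`, `oob_error`, `permutation_importance`, and so on) are hypothetical. Features are assumed numeric, and labels are assumed to be plain ints or strings. The importance function is a simplification: it shuffles a feature across all rows instead of within each tree's out-of-bag set, as the paper describes.

```python
import random
from collections import Counter

# A minimal from-scratch sketch of Breiman-style random forest classification.
# Hypothetical helper names; rows are lists of numbers, labels are ints/strings.


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    """Most common label (ties broken by first occurrence)."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, mtry, max_depth, rng, depth=0):
    """Grow a tree; each split considers only `mtry` randomly chosen features."""
    if depth == max_depth or len(set(y)) == 1:
        return majority(y)  # leaf: predict the majority class
    best = None  # (weighted Gini, feature, threshold)
    for f in rng.sample(range(len(X[0])), mtry):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # the sampled features cannot split this node
        return majority(y)
    _, f, t = best
    go_left = [row[f] <= t for row in X]
    L = [i for i, g in enumerate(go_left) if g]
    R = [i for i, g in enumerate(go_left) if not g]
    # Internal node: (feature, threshold, left subtree, right subtree)
    return (f, t,
            build_tree([X[i] for i in L], [y[i] for i in L], mtry, max_depth, rng, depth + 1),
            build_tree([X[i] for i in R], [y[i] for i in R], mtry, max_depth, rng, depth + 1))


def predict_tree(node, row):
    """Follow splits until reaching a leaf label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, mtry=1, max_depth=5, seed=0):
    """Grow each tree on a bootstrap sample and record its out-of-bag rows."""
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # n rows drawn with replacement
        oob = set(range(n)) - set(idx)              # rows this tree never saw
        tree = build_tree([X[i] for i in idx], [y[i] for i in idx], mtry, max_depth, rng)
        forest.append((tree, oob))
    return forest


def predict_forest(forest, row):
    """Unweighted majority vote over all trees."""
    return majority([predict_tree(tree, row) for tree, _ in forest])


def oob_error(forest, X, y):
    """Error rate where each row is voted on only by trees that did not train on it."""
    wrong = counted = 0
    for i, (row, label) in enumerate(zip(X, y)):
        votes = [predict_tree(tree, row) for tree, oob in forest if i in oob]
        if votes:
            counted += 1
            wrong += majority(votes) != label
    return wrong / counted if counted else float("nan")


def permutation_importance(forest, X, y, feature, seed=0):
    """Rise in OOB error after shuffling one feature (simplified: shuffles all rows)."""
    rng = random.Random(seed)
    column = [row[feature] for row in X]
    rng.shuffle(column)
    X_perm = [row[:feature] + [v] + row[feature + 1:] for row, v in zip(X, column)]
    return oob_error(forest, X_perm, y) - oob_error(forest, X, y)


# Toy data: feature 0 decides the class, feature 1 is pure noise.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]
forest = fit_forest(X, y, n_trees=25, mtry=1, max_depth=4)
print("OOB error:", oob_error(forest, X, y))
print("importance of feature 0:", permutation_importance(forest, X, y, 0))
print("importance of feature 1:", permutation_importance(forest, X, y, 1))
print("prediction for [0.9, 0.1]:", predict_forest(forest, [0.9, 0.1]))
```

On this toy data, the out-of-bag error should be low, and shuffling feature 0 should raise it far more than shuffling the noise feature. No separate validation set is needed for either number. Setting `mtry` to the total number of features turns the sketch into plain bagging of trees. Smaller values decorrelate the trees, which is the design choice the video discusses.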

📊 Key contributions of the article:

Introduction of a scalable and robust ensemble method

Internal error estimation without a separate validation set

Strong performance on large, complex, and mixed-type datasets

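A theoretical foundation for ensemble learning: an upper bound on generalization error in terms of the strength of the individual trees and the correlation between them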
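Breiman's paper makes this foundation concrete. For an ensemble of classifiers $h(x, \Theta)$, it defines a margin function $mr(X, Y)$ and the strength $s$ as the expected margin. It then bounds the generalization error $PE^*$ using $\bar\rho$, the mean correlation between trees (precisely, between their raw margin functions), assuming $s > 0$:

$$
mr(X,Y) = P_\Theta\big(h(X,\Theta)=Y\big) - \max_{j \neq Y} P_\Theta\big(h(X,\Theta)=j\big),
\qquad s = \mathbb{E}_{X,Y}\, mr(X,Y)
$$

$$
PE^* \le \frac{\bar\rho\,(1-s^2)}{s^2}
$$

Read informally, the bound shrinks when the individual trees are strong (large $s$) and weakly correlated (small $\bar\rho$). This is the motivation for random feature selection at each split.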

📚 Reference:

Breiman, L. (2001). Random Forests. Machine Learning, 45(1), 5–32.

https://doi.org/10.1023/A:1010933404324

This video is ideal for:

Data scientists and machine learning practitioners

Students learning predictive modeling

Researchers working with complex datasets

Anyone seeking a clear explanation of a foundational ML algorithm
