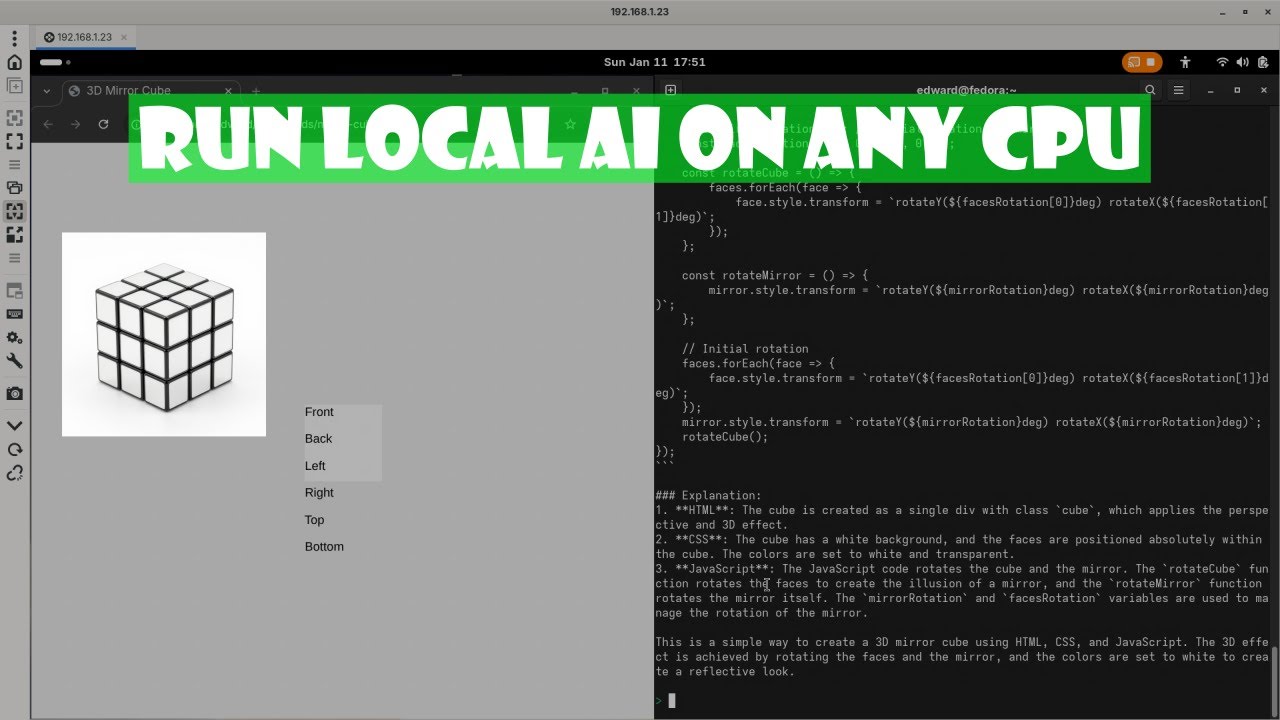

Run Local AI on Any CPU Laptop with Ollama and Qwen2.5-Coder

Author: ojamboshop

Uploaded: 2026-01-14

Views: 46

Learn how to run a capable AI coding model locally on your Fedora Linux laptop using only the CPU. This screencast walks step by step through installing Ollama and running the Qwen2.5-Coder model, all over a remote desktop connection. You will see how simple it is to set up a private coding assistant without an expensive graphics card. The guide is aimed at beginners who want to explore local AI and improve their programming workflow.
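Once Ollama is installed and the model has been pulled (for example with `ollama pull qwen2.5-coder`), you can also query it from your own scripts. Below is a minimal sketch that is not taken from the video. It assumes the Ollama server is running on its default address, `http://localhost:11434`, and calls its `/api/generate` endpoint with streaming turned off. The function names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "qwen2.5-coder") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "qwen2.5-coder", timeout: float = 600.0) -> str:
    """Send the prompt to the local server and return the model's full text reply."""
    request = build_generate_request(prompt, model)
    # CPU-only inference can be slow, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With "stream": False, Ollama returns one JSON object whose "response" field holds the text.
    return body["response"]


# Example usage (requires a running Ollama server with the model pulled):
# print(generate("Write a Python function that reverses a string."))
```

Setting `stream` to `False` returns the whole reply as a single JSON object, which keeps the sketch short. In Ollama's default streaming mode, the answer arrives as a series of JSON objects, which suits interactive use.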

Read the full blog article here: https://ojambo.com/how-to-run-qwen2-5...

Take Your Skills Further

Books: https://www.amazon.com/stores/Edward-...

Online Courses: https://ojamboshop.com/product-catego...

One-on-One Tutorials: https://ojambo.com/contact

Consultation Services: https://ojamboservices.com/contact

#Ollama #Fedora #Qwen25 #LocalAI #Linux #Programming #CPUAIDebugging
