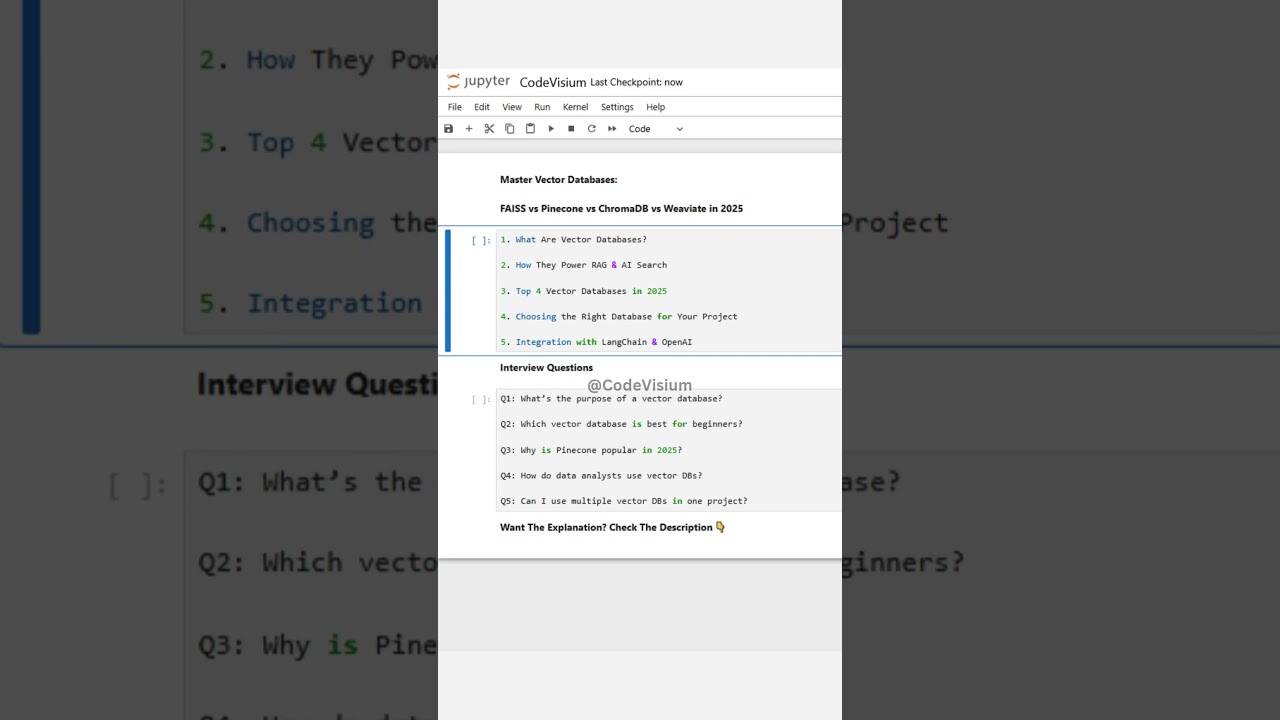

Master Vector Databases: FAISS vs Pinecone vs ChromaDB vs Weaviate in 2025

Author: CodeVisium

Uploaded: 2025-10-06

Views: 1232

Vector databases store embeddings — numerical representations of text, images, or data — that let AI find similar content by meaning, not just keywords.

In 2025, vector DBs are the foundation of semantic search, recommendation systems, RAG pipelines, and AI chatbots.

Let’s understand how each works and where to use them 👇

🔹 1. What Are Vector Databases?

Vector databases index high-dimensional vectors (from models like OpenAI’s text-embedding-3-large).

Instead of matching exact words, they match concepts — “revenue growth” ≈ “sales increase.”

👉 Used for: Search engines, chatbots, recommendation engines, document intelligence.
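To make "matching concepts" concrete, here is a minimal sketch of the similarity comparison a vector database performs. The three-dimensional vectors are hand-picked, hypothetical values for illustration only; a real embedding model produces hundreds or thousands of dimensions, and production systems use approximate indexes rather than this brute-force scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings (not produced by any real model).
toy_vectors = {
    "revenue growth": [0.9, 0.1, 0.0],
    "sales increase": [0.8, 0.2, 0.1],
    "office parking": [0.0, 0.1, 0.9],
}

def nearest(query, vectors):
    """Return the stored phrase most similar to `query`, excluding the query itself."""
    candidates = [
        (cosine_similarity(vectors[query], vector), phrase)
        for phrase, vector in vectors.items()
        if phrase != query
    ]
    return max(candidates)[1]

best_match = nearest("revenue growth", toy_vectors)
print(best_match)
```

With these toy values, "revenue growth" lands next to "sales increase" even though the two phrases share no words.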

🔹 2. How They Power RAG & AI Search

In Retrieval-Augmented Generation (RAG), vector DBs:

Store document embeddings

Retrieve relevant chunks when a query is asked

Pass them to OpenAI GPT for context-aware answers

👉 Result: Smart, factual, and context-grounded outputs.
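The three steps above can be sketched without any external service. In this sketch, `embed` is a toy word-count function standing in for a real embedding model, a plain list stands in for the vector DB, and the final prompt is only built, not sent to a model. All names here are illustrative assumptions, not part of any library.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Step 1: store document embeddings (an in-memory list stands in for the vector DB).
chunks = [
    "Q3 revenue grew 12 percent year over year",
    "The office moves to a new building in May",
    "Revenue growth was driven by the enterprise plan",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(query, k=2):
    """Step 2: return the k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(query, k=2):
    """Step 3: assemble the context-grounded prompt that would be passed to the LLM."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("What drove revenue growth?")
print(prompt)
```

Only the two revenue-related chunks reach the prompt; the unrelated office chunk is left out, which is what keeps the answer grounded.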

🔹 3. Top 4 Vector Databases in 2025

🧠 FAISS (Facebook AI Similarity Search)

Open-source similarity-search library (not a hosted service) that runs in-process, so it is very fast for local use

Best for prototyping or personal AI projects

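from langchain_community.vectorstores import FAISS

# Assumes `texts` is a list of strings and `embeddings` is a LangChain embeddings object; needs the faiss-cpu package.
db = FAISS.from_texts(texts, embeddings)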

🌲 Pinecone

Cloud-hosted, scalable, easy to integrate with LangChain

Used in production-grade RAG systems

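from langchain_pinecone import PineconeVectorStore

# Connects to an existing index; the API key is read from the PINECONE_API_KEY environment variable.
db = PineconeVectorStore(index_name="my-index", embedding=embeddings)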

🧩 ChromaDB

Open-source and lightweight, perfect for local apps

Integrates seamlessly with LangChain and LlamaIndex

from langchain_community.vectorstores import Chroma

db = Chroma.from_texts(texts, embeddings)

🌐 Weaviate

Open-source database, self-hosted or managed cloud, with hybrid (keyword + vector) search and metadata filtering

Supports multi-modal data (text, image, audio)

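import weaviate
from weaviate.classes.init import Auth

# v4 Python client; "YOUR_API_KEY" is a placeholder for your own key.
client = weaviate.connect_to_weaviate_cloud(cluster_url="https://your-instance.weaviate.network", auth_credentials=Auth.api_key("YOUR_API_KEY"))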

🔹 4. Choosing the Right Database for Your Project

| Use Case | Best Option |
| --- | --- |
| Local Prototyping | FAISS or ChromaDB |
| Cloud Scale & Enterprise | Pinecone |
| Multi-Modal or Hybrid | Weaviate |
| Cost-Effective Open Source | ChromaDB |

🔹 5. Integration with LangChain & OpenAI

LangChain provides vector store integrations for all four of these databases. You can build pipelines like:

📂 Data → 🧠 Embeddings → 🗃️ Vector DB → 🤖 OpenAI GPT → 📊 Insight

This allows analysts to query PDFs, SQL, or CSVs conversationally.
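The first arrow in that pipeline (Data → Embeddings) usually means turning structured rows into text that an embedding model can read. Here is a minimal sketch for CSV input using only the standard library; the column names and the `rows_to_documents` helper are hypothetical, chosen just for illustration.

```python
import csv
import io

# Hypothetical CSV content; in practice this would be read from a file.
raw_csv = """region,quarter,revenue
EMEA,Q1,120
APAC,Q1,95
"""

def rows_to_documents(csv_text):
    """Turn each CSV row into a sentence-like string plus metadata for later filtering."""
    reader = csv.DictReader(io.StringIO(csv_text))
    documents = []
    for row_number, row in enumerate(reader, start=1):
        text = ", ".join(f"{column}: {value}" for column, value in row.items())
        documents.append({"text": text, "metadata": {"row": row_number}})
    return documents

documents = rows_to_documents(raw_csv)
for document in documents:
    print(document["text"])
```

Each resulting `text` string can then be embedded and stored, while the `metadata` travels with it so an answer can be traced back to its source row.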

❓ 5 Questions & Answers

Q1: What’s the purpose of a vector database?

👉 To store and search embedding vectors for semantic similarity instead of keyword matching.

Q2: Which vector database is best for beginners?

👉 FAISS and ChromaDB — they’re free, simple, and great for experimentation.

Q3: Why is Pinecone popular in 2025?

👉 It offers scalability, cloud hosting, and fast retrieval — perfect for production RAG systems.

Q4: How do data analysts use vector DBs?

👉 To build internal knowledge assistants that can search reports, FAQs, or dashboards via natural language.

Q5: Can I use multiple vector DBs in one project?

👉 Yes. LangChain can wrap several vector stores in the same application, for instance FAISS as a fast local cache and Pinecone for cloud data.
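One way to picture the local-cache-plus-cloud setup is a tiered lookup: ask the local store first and fall back to the remote one on a miss. The sketch below uses a hypothetical `InMemoryStore` class that matches on shared words rather than embeddings; it stands in for real FAISS and Pinecone stores and is not part of LangChain.

```python
class InMemoryStore:
    """Hypothetical stand-in for a vector store; scores by shared words, not embeddings."""

    def __init__(self, name, texts):
        self.name = name
        self.texts = list(texts)

    def search(self, query, k=1):
        query_words = set(query.lower().split())
        scored = [(len(query_words & set(text.lower().split())), text) for text in self.texts]
        hits = [(score, text) for score, text in scored if score > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in hits[:k]]

def tiered_search(query, local, remote, k=1):
    """Try the local store first; fall back to the remote store on a miss."""
    results = local.search(query, k)
    if results:
        return local.name, results
    return remote.name, remote.search(query, k)

local_cache = InMemoryStore("local", ["refund policy lasts 30 days"])
cloud_store = InMemoryStore(
    "cloud",
    ["refund policy lasts 30 days", "shipping takes 5 business days"],
)

source_a, hits_a = tiered_search("refund policy", local_cache, cloud_store)
source_b, hits_b = tiered_search("shipping time", local_cache, cloud_store)
print(source_a, hits_a)
print(source_b, hits_b)
```

The first query is answered from the local cache; the second misses locally and is served by the cloud stand-in.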

📌 Pro Tip:

For large data projects, use chunking + metadata filters to improve retrieval accuracy. Combine LangChain retrievers + vector DBs with OpenAI for building smart search and analytics systems.
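As a rough illustration of the tip, the sketch below splits text into overlapping word chunks and then narrows the candidates by metadata before any similarity search would run. The chunk size, overlap, and metadata fields are arbitrary assumptions for the example; real pipelines typically chunk by tokens or characters and apply filters inside the vector database itself.

```python
def chunk_text(text, chunk_size=5, overlap=2):
    """Split text into chunks of `chunk_size` words, each sharing `overlap` words with the previous chunk."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def filter_by_metadata(records, **required):
    """Keep only the records whose metadata matches every required key/value pair."""
    return [
        record for record in records
        if all(record["metadata"].get(key) == value for key, value in required.items())
    ]

report = "Revenue rose in Q3 while costs fell across every region"
records = [
    {"text": chunk, "metadata": {"source": "q3_report", "year": 2025}}
    for chunk in chunk_text(report)
]
records.append({"text": "Legacy pricing table", "metadata": {"source": "archive", "year": 2019}})

recent = filter_by_metadata(records, year=2025)
for record in recent:
    print(record["text"])
```

The overlap keeps phrases that straddle a chunk boundary retrievable, and the metadata filter keeps outdated material out of the candidate set.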
