R Tutorial: PCA and t-SNE

Author: DataCamp

Uploaded: 2020-03-30

Views: 6893

Want to learn more? Take the full course at https://learn.datacamp.com/courses/ad... at your own pace. More than a video, you'll learn hands-on coding & quickly apply skills to your daily work.

---

In the previous lesson, we discussed distance metrics and how they can be used to find similar objects in a feature space. It's clear that finding similar digits based on their pixel features is a complex task that can't easily be solved using distance metrics alone.

One problem with distance metrics is that they cope poorly with high-dimensional datasets, an effect known as the curse of dimensionality.

In this lesson, we will explain the curse of dimensionality and then focus on how the problem of finding similar digits can be solved using dimensionality reduction techniques such as PCA and t-SNE. Of the two, t-SNE usually provides better results.

One issue with finding similar objects in high-dimensional spaces, which does not occur in low-dimensional ones (for example, in three dimensions), is the curse of dimensionality.

The term was coined by Richard Bellman. It describes the phenomena that arise as the number of dimensions grows: the volume of the space increases so fast that the available data become sparse, and an exponentially growing amount of data is needed to keep points as close to each other as they were in lower dimensions.

For instance, in this example, we can capture 37.5% of the points in one dimension within a distance of 0.4. If we keep the same distance, we will only cover 10% of the points in two dimensions. The same effect continues in higher dimensions.
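The shrinking coverage can be checked with a small simulation. The sketch below is an illustration under its own assumptions, not the setup behind the percentages quoted above: it draws points uniformly from a unit hypercube and measures the share that falls within a Euclidean distance of 0.4 from the center. The helper name coverage_within() and the chosen dimensions are hypothetical.

```r
# Assumption: points are uniform in the unit hypercube [0, 1]^d. This is an
# illustrative setup and does not reproduce the percentages quoted above.
set.seed(42)

coverage_within <- function(d, radius = 0.4, n = 20000) {
  pts <- matrix(runif(n * d), nrow = n, ncol = d)
  # Euclidean distance of every point to the center (0.5, ..., 0.5)
  dist_to_center <- sqrt(rowSums((pts - 0.5)^2))
  # Share of points that lie within the fixed radius
  mean(dist_to_center <= radius)
}

dims <- c(1, 2, 3, 5, 10)
coverage <- sapply(dims, coverage_within)
names(coverage) <- paste0("d=", dims)
print(round(coverage, 3))
```

With the radius held fixed, the covered share falls at every step as dimensions are added, which is why a neighborhood that looks dense in one dimension is nearly empty in ten.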

One way to avoid this effect is using dimensionality reduction techniques.

PCA is a classic and well-known dimensionality reduction technique. It has been covered in a previous dimensionality reduction course at DataCamp.

PCA is a linear feature extraction technique. It creates new, uncorrelated features by maximizing the variance of the data in the new low-dimensional space.

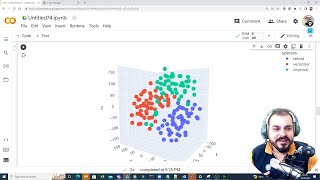

PCA finds the principal components that capture the maximal variance in the dataset. In this example, we have a three-dimensional dataset, and we can obtain a two-dimensional embedding by projecting it onto the first two principal components.

In R, we can use the prcomp() function from the stats package to compute the principal components.

Here we compute the principal components using the default parameters, after removing the first column of the dataset, which corresponds to the digit label.
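The code shown in the video is not part of this transcript, so the following is only a sketch of what such a call might look like. It assumes a data frame whose first column is the digit label and whose remaining columns are pixel values. The name mnist_sample and the small random stand-in data are hypothetical.

```r
# Hypothetical stand-in for the digits data: the label is in the first column,
# followed by pixel intensities (16 columns here instead of MNIST's 784).
set.seed(1)
n_obs <- 200
n_pixels <- 16
pixels <- matrix(sample(0:255, n_obs * n_pixels, replace = TRUE), nrow = n_obs)
colnames(pixels) <- paste0("pixel", seq_len(n_pixels) - 1)
mnist_sample <- data.frame(label = sample(0:9, n_obs, replace = TRUE), pixels)

# Drop the label column and run PCA with the default parameters
# (data are centered but not scaled).
pca_result <- prcomp(mnist_sample[, -1])

# The projected data (scores) are stored in $x, one column per component.
dim(pca_result$x)
```

Dropping the label first matters: it is the target we want to recover, not a pixel feature, so it should not influence the components.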

We can also get only the first two principal components by setting the rank. parameter, which prcomp() spells with a trailing dot. The proportion of variance captured by those principal components can be shown using the summary() function.
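As a rough sketch of these two steps, again on hypothetical random data rather than the MNIST data used in the video (the name digits_df is an assumption):

```r
# Hypothetical stand-in data: label in the first column, 16 pixel-like columns.
set.seed(1)
n_obs <- 200
pixels <- matrix(runif(n_obs * 16, min = 0, max = 255), nrow = n_obs)
digits_df <- data.frame(label = sample(0:9, n_obs, replace = TRUE), pixels)

# Keep only the first two principal components via the rank. argument.
pca_2d <- prcomp(digits_df[, -1], rank. = 2)
dim(pca_2d$x)

# Standard deviation and proportion of variance for the retained components.
summary(pca_2d)

# The same proportions computed by hand from the component standard deviations.
var_explained <- pca_2d$sdev^2 / sum(pca_2d$sdev^2)
round(var_explained[1:2], 3)
```

On random data like this, the first two components capture only a small share of the variance. On real digit images the share is larger, but it is still worth checking before trusting a two-dimensional plot.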

In this picture, we plot the first two principal components of the MNIST dataset on the x and y axes. Each digit is colored according to its label.

As you can see, PCA has some trouble separating the digits. This is because PCA can only capture the linear structure in the input space.

On the other hand, here you can see the output of t-SNE on the full MNIST dataset. The algorithm generates a two-dimensional embedding from the original 784 dimensions of each digit. This embedding provides a much better representation than the one produced by PCA.
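The transcript does not say which R implementation of t-SNE is used. One commonly used option is the Rtsne package, and the sketch below assumes it is installed, skipping the step otherwise. The data are a hypothetical random stand-in, so the resulting embedding will not show the digit clusters visible in the video.

```r
# Assumption: the Rtsne package (not named in this lesson) is available.
# Hypothetical stand-in data: 200 observations with 16 pixel-like features.
set.seed(1)
n_obs <- 200
pixels <- matrix(runif(n_obs * 16, min = 0, max = 255), nrow = n_obs)

embedding <- NULL
if (requireNamespace("Rtsne", quietly = TRUE)) {
  # dims = 2 requests a two-dimensional embedding; perplexity = 30 is the
  # package default and must be small relative to the number of rows.
  tsne_out <- Rtsne::Rtsne(pixels, dims = 2, perplexity = 30)
  embedding <- tsne_out$Y  # one row per observation, two columns
  print(head(embedding))
}
```

Unlike PCA, t-SNE is stochastic, so fixing the seed is what makes a run repeatable.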

Let's practice and look at the differences between PCA and t-SNE output.

#R #RTutorial #DataCamp #Advanced #Dimensionality #Reduction #PCA #tSNE
