The Recursive Language Model Revolution: Scaling Context by 100x

Author: SciPulse

Uploaded: 2026-01-14

Views: 40

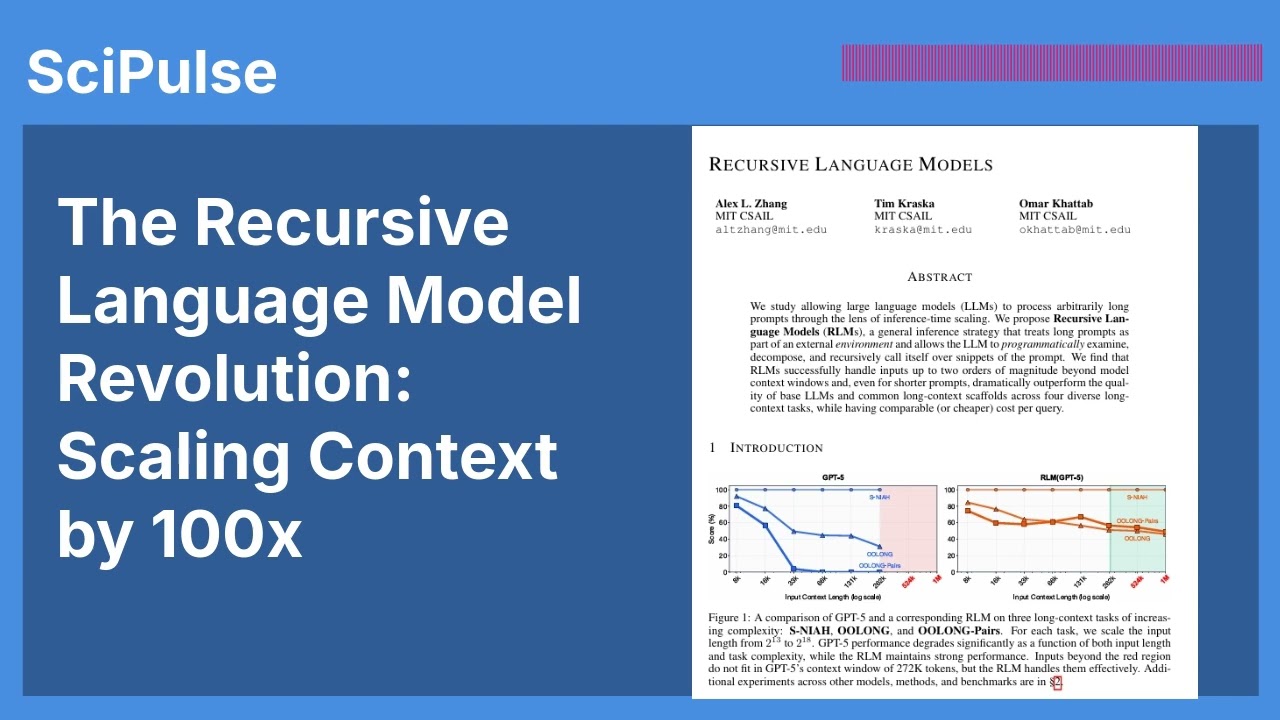

In this episode of SciPulse, we dive into a revolutionary shift in how Artificial Intelligence processes information: Recursive Language Models (RLMs). As Large Language Models (LLMs) take on more complex, long-horizon tasks, they run into a critical hurdle known as "context rot": the steady decline in performance as prompts grow longer.

Working at the frontier of inference-time scaling, researchers from MIT CSAIL propose a way for models like GPT-5 and Qwen3-Coder to process prompts up to two orders of magnitude longer than their native context windows.

In this episode, we discuss:

The Symbolic Shift: Why feeding long prompts directly into a neural network degrades its performance, and why treating the text as an external environment is the proposed solution.

The REPL Advantage: How RLMs use a Python REPL environment to programmatically "peek" into, decompose, and recursively analyze massive inputs.

10 Million Tokens and Beyond: A look at how RLMs handle prompts of 10M+ tokens on benchmarks such as BrowseComp-Plus and OOLONG, significantly outperforming traditional retrieval and summarization methods.

Efficiency vs. Complexity: Understanding why RLMs maintain strong performance on information-dense tasks where the answer depends on nearly every line of the prompt.

The Future of Deep Research: How "symbolic interaction" within a persistent programming environment could be the next axis of scale for AI.
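To make the "peek, decompose, recurse" idea concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not the paper's code: in an RLM the model itself writes code inside the REPL to decide how to inspect and split the prompt, whereas this sketch hard-codes one strategy (split on line boundaries, recurse on each half, sum the partial answers). The names `toy_llm`, `recursive_query`, `sum_counts`, and `make_log`, as well as the 1,000-character budget, are hypothetical, and `toy_llm` is an offline stand-in for a real model call.

```python
from typing import Callable

# Hypothetical model interface: (query, context) -> answer string.
LLM = Callable[[str, str], str]


def toy_llm(query: str, context: str) -> str:
    """Hypothetical stand-in for a real LLM call so the sketch runs offline.

    It counts the lines of `context` that contain the last word of `query`.
    """
    keyword = query.split()[-1]
    return str(sum(1 for line in context.splitlines() if keyword in line))


def sum_counts(parts: list[str]) -> str:
    """Combine sub-answers; here every sub-answer is an integer count."""
    return str(sum(int(p) for p in parts))


def recursive_query(
    query: str,
    context: str,
    llm: LLM,
    combine: Callable[[list[str]], str],
    max_chars: int = 1_000,  # hypothetical per-call context budget
) -> str:
    # Base case: this slice of the context fits the budget, so call the model.
    if len(context) <= max_chars:
        return llm(query, context)
    lines = context.splitlines(keepends=True)
    if len(lines) == 1:
        # A single oversized line cannot be split on line boundaries; pass it as-is.
        return llm(query, context)
    # Decompose on line boundaries so no record is cut in half.
    mid = len(lines) // 2
    halves = ["".join(lines[:mid]), "".join(lines[mid:])]
    # Recurse on each half, then merge the partial answers.
    return combine([recursive_query(query, h, llm, combine, max_chars) for h in halves])


def make_log(n: int) -> str:
    """Synthetic log where every 7th line is an error (demo data only)."""
    return "".join(
        f"{i:05d} {'ERROR disk full' if i % 7 == 0 else 'INFO ok'}\n" for i in range(n)
    )


if __name__ == "__main__":
    log = make_log(10_000)  # roughly 150k characters, far above the budget
    print(recursive_query("count ERROR", log, toy_llm, sum_counts))  # → 1429
```

Counting matching lines is a task where the answer depends on every line, so a single retrieved excerpt or a lossy summary would not be enough. The recursive split keeps each model call within its budget while still covering the whole input; the combine step only works this simply because counts add up across chunks.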

Whether you are a machine learning researcher or just curious about the next step in AI evolution, this episode explains how we are moving from reasoning purely in "token space" to reasoning through structured, recursive execution.

Educational Disclaimer: This podcast provides an educational summary and discussion of the paper "Recursive Language Models." It is not a replacement for the original research, which contains the full technical methodology and experimental data.

YouTube Video: Recursive Language Models: Scaling AI Cont...

Explore the Research: https://arxiv.org/pdf/2512.24601

#AI #MachineLearning #LLM #RecursiveLanguageModels #ComputerScience #DeepLearning #ContextScaling #GPT5 #MITCSAIL #NLP #Research #TechPodcast #SciPulse #PythonREPL #ArtificialIntelligence
