Attention Is All You Need: Seq2Seq Models & The Mechanism That Built GPT & BERT

Author: AI Academy

Uploaded: 2025-11-07

Views: 169


Short Description

Attention is the core mechanism behind Google Translate, ChatGPT, and modern LLMs! We dive into the world of Sequence-to-Sequence (Seq2Seq) models and tackle their biggest flaw, the Context Vector Bottleneck: the encoder has to squeeze the entire input into a single fixed-size vector.

You will learn:

The Encoder-Decoder architecture and why it struggles with long inputs.

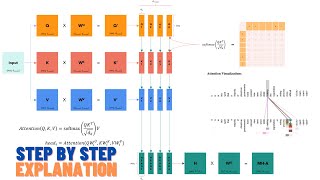

How the revolutionary Attention Mechanism allows the decoder to dynamically "pay attention" to relevant input parts.

The exact mechanics of attention: scoring, softmax, and weighted sum.

The simple question ("What if we only use attention?") that led to the Transformer architecture (BERT, GPT, etc.).

Practical PyTorch code examples for implementation.
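The three attention steps listed above (scoring, softmax, weighted sum) can be sketched in a few lines. This is a minimal illustration in plain Python rather than the PyTorch code from the video, and it assumes dot-product scoring, which is only one of several possible scoring functions. The names `softmax`, `dot_product_attention`, `query`, and `encoder_states` are hypothetical and chosen for this example.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, encoder_states):
    # 1. Scoring: compare the decoder query with every encoder state.
    #    (Assumption: dot-product scoring; other scoring functions exist.)
    scores = [sum(q * h for q, h in zip(query, state))
              for state in encoder_states]
    # 2. Softmax: turn the raw scores into weights that sum to 1.
    weights = softmax(scores)
    # 3. Weighted sum: blend the encoder states into one context vector.
    dim = len(encoder_states[0])
    context = [sum(w * state[i] for w, state in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context


# Toy data: three encoder states of size 2 and one decoder query.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]
weights, context = dot_product_attention(query, encoder_states)
print(weights)
print(context)
```

Because the context vector is recomputed for every decoder step with a fresh query, the decoder is no longer limited to one fixed summary of the input.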

Master this concept to truly understand the deep learning revolution!

Hashtags

#AttentionMechanism #Seq2Seq #Transformers #NLP #DeepLearning #PyTorch #MachineTranslation #LLM #AITutorial
