ReLU Activation Function Explained | The Secret Behind Deep Learning

Author: MySirG

Uploaded: 2026-01-07

Views: 193

Day-95 | #100dayslearningchallenge

How do deep learning models become fast and powerful?

One big reason is the ReLU (Rectified Linear Unit) activation function.

In this video, we go through the ReLU activation function step by step, with basic maths, intuition, graphs, and real AI context. If you are learning neural networks, this is a concept you cannot afford to skip.

📌 In This Video You’ll Learn:

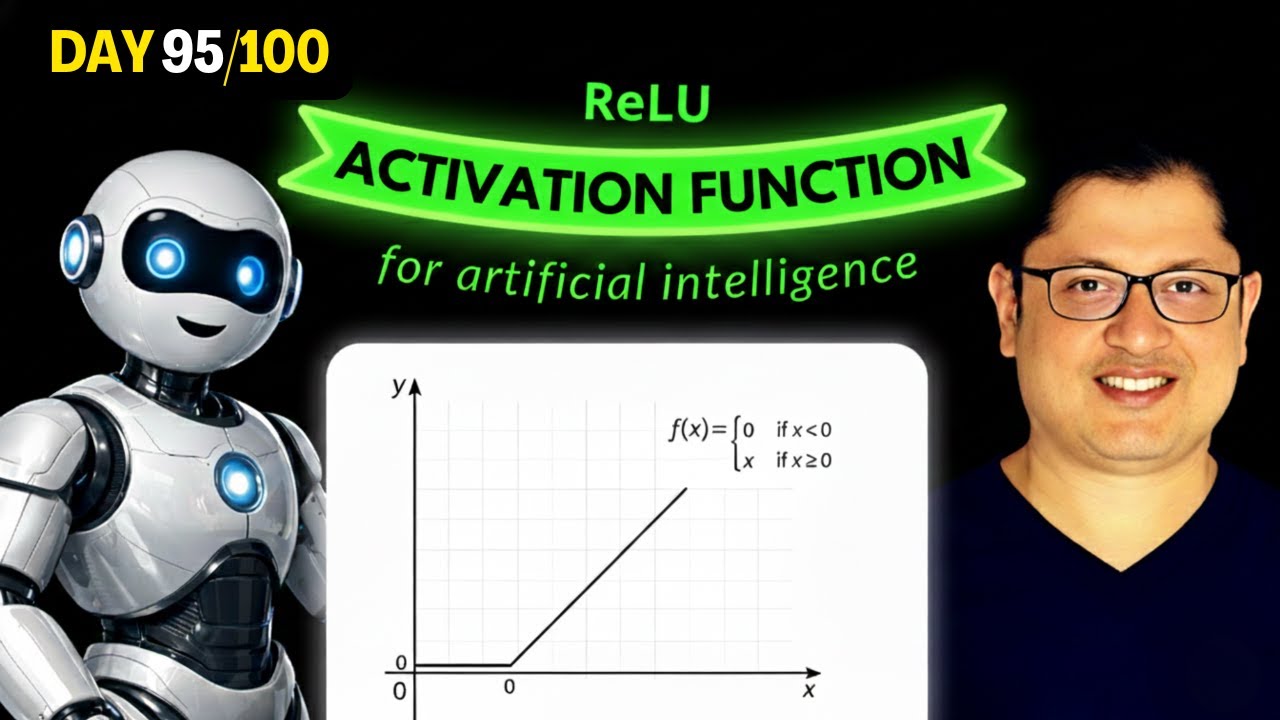

✔ What the ReLU activation function is

✔ Mathematical definition & intuition

✔ How the ReLU graph behaves

✔ Why it is considered better than sigmoid

✔ How ReLU addresses the vanishing gradient problem

✔ Dying ReLU problem & its fix (Leaky ReLU)

✔ The role of ReLU in hidden layers
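
For readers who want to try the topics above before watching, here is a minimal Python sketch. It is not taken from the video. The function names are illustrative, and the Leaky ReLU slope `alpha=0.01` is only a commonly used default, assumed here. It uses the standard definitions ReLU(x) = max(0, x) and sigmoid(x) = 1 / (1 + e^(-x)), and compares their gradients to hint at why sigmoid gradients shrink for large inputs while ReLU's gradient stays at 1 for positive inputs.

```python
import math


def relu(x: float) -> float:
    """ReLU(x) = max(0, x): passes positive inputs through, zeroes out the rest."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1 for x > 0, else 0 (the value at x == 0 is a convention)."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU keeps a small slope alpha for x <= 0 (alpha=0.01 is an assumed default)."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient of Leaky ReLU: never exactly zero, so the unit can keep learning."""
    return 1.0 if x > 0 else alpha


def sigmoid(x: float) -> float:
    """Logistic sigmoid, squashing any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Gradient of sigmoid: s * (1 - s), which peaks at 0.25 when x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    # Print each function's gradient at a few sample inputs for comparison.
    for x in (-3.0, -0.5, 0.0, 2.0, 6.0):
        print(
            f"x={x:+.1f}  "
            f"relu'={relu_grad(x):.2f}  "
            f"leaky'={leaky_relu_grad(x):.2f}  "
            f"sigmoid'={sigmoid_grad(x):.4f}"
        )
```

Running the script prints the three gradients side by side. For negative inputs the ReLU gradient is exactly 0, which is the "dying ReLU" situation, while Leaky ReLU still passes a small gradient through.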

👉 Join the #100DaysLearningChallenge

👉 Subscribe to MySirG for daily lessons on coding, projects, and core programming concepts!

________________________________

DSA for FAANG Batch Details: https://premium.mysirg.com/learn/batc...

Offer is for limited time

_______________________________

🎯 Watch more videos in this series → • 100 Days Learning Challenge

🎯 Basic Maths for AI and Data Science: • Basic Maths for AI and Data Science

UCF Batch: https://premium.mysirg.com/learn/batc...

My other channel: / @mysirgdotcom

🎯 Learn once, use forever!

#MySQL #Database #SQL #MySirG #100DaysLearningChallenge #LearnCoding #python #cmd #sorting #faang #google #facebook #amazon #apple #netflix #meta #jobs #dsa #bogosort #datastructures

#algorithms #mysirg

Website

https://premium.mysirg.com

Instagram

/ mysirg

Old Channel

/ @mysirgdotcom

LinkedIn

/ saurabh-shukla-5b73bb6

X

https://x.com/sshukla_manit
