The Power of Ensemble Learning: How to Use Stacking for Better Machine Learning Models

Author: Super Data Science

Uploaded: 2025-02-24

Views: 3094

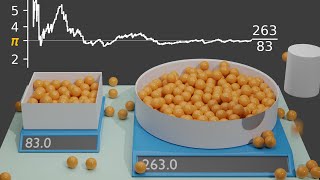

How do you get the best out of multiple machine learning models? By using stacking! In this tutorial, we explore the power of ensemble learning and why stacking is such an effective technique for improving machine learning predictions. You'll learn how to combine base models like Linear Regression, XGBoost, and Neural Networks by training a meta model on their predictions, so the combined model can outperform any single one.

Course Link HERE: https://sds.courses/ml-2

You can also find us here:

Website: https://www.superdatascience.com/

Facebook: / superdatascience

Twitter: / superdatasci

LinkedIn: / superdatascience

Contact us at: [email protected]

📌 Chapters

00:00 - Introduction to Ensemble Learning and Stacking

00:30 - Why Use Multiple Models Instead of One?

01:03 - The Basics of Model Averaging and Weighting

01:36 - What is Stacking in Machine Learning?

02:05 - Stacking in Regression vs. Classification Problems

02:38 - How K-Fold Cross Validation Works in Stacking

03:08 - Training Base Models Using Cross Validation

04:12 - Generating Predictions for the Meta Model

05:16 - How to Train the Meta Model Using Base Model Outputs

05:51 - Applying the Stacked Model to Test Data

06:24 - Making Predictions with the Meta Model

06:56 - Final Thoughts and Recap on Stacking

07:29 - Next Steps and Where to Learn More
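
For readers who want to follow the chapters in code, here is a minimal sketch of the stacking workflow for a regression problem: K-fold cross validation produces out-of-fold predictions from each base model, and a meta model is trained on them. Everything in it is an assumption made for illustration rather than the video's own code: the synthetic data, the helper names (`stack_fit`, `stack_predict`), and the two stand-in base models (least-squares regression and k-nearest neighbours in place of XGBoost or a neural network). Refitting the base models on the full training set before predicting on test data is also just one common choice.

```python
import numpy as np

# --- Two simple stand-in base models (illustrative, not the video's models) ---
def fit_linear(X, y):
    """Ordinary least squares with an intercept; returns the weight vector."""
    A = np.column_stack([np.ones(len(X)), X])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w

def predict_linear(w, X):
    return np.column_stack([np.ones(len(X)), X]) @ w

def fit_knn(X, y, k=5):
    """k-nearest neighbours is a lazy learner: just store the training data."""
    return X, y, k

def predict_knn(model, X):
    X_train, y_train, k = model
    dists = ((X[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)

BASE_MODELS = [(fit_linear, predict_linear), (fit_knn, predict_knn)]

def stack_fit(X, y, n_folds=5, seed=0):
    """Train base models with K-fold CV, then fit the meta model."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), n_folds)
    # oof[i, m] = prediction of base model m for row i, made by a copy of
    # the model that never saw row i during training (out-of-fold).
    oof = np.zeros((len(X), len(BASE_MODELS)))
    for i, val_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        for m, (fit, predict) in enumerate(BASE_MODELS):
            model = fit(X[train_idx], y[train_idx])
            oof[val_idx, m] = predict(model, X[val_idx])
    # Meta model: a linear regression on the base models' predictions.
    meta = fit_linear(oof, y)
    # Refit each base model on all training data for use at test time.
    fitted = [fit(X, y) for fit, _ in BASE_MODELS]
    return fitted, meta, oof

def stack_predict(fitted, meta, X_new):
    """Base-model predictions become the meta model's input features."""
    Z = np.column_stack(
        [predict(model, X_new) for (_, predict), model in zip(BASE_MODELS, fitted)]
    )
    return predict_linear(meta, Z)

# --- Synthetic demo data (hypothetical) ---
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 2))
y = 2 * X[:, 0] + np.sin(3 * X[:, 1]) + rng.normal(0, 0.1, size=300)
X_train, y_train, X_test, y_test = X[:200], y[:200], X[200:], y[200:]

fitted, meta, oof = stack_fit(X_train, y_train)
stacked_pred = stack_predict(fitted, meta, X_test)
mse_stacked = float(np.mean((stacked_pred - y_test) ** 2))
print("meta model weights (intercept, linear, knn):", meta)
print("stacked test MSE:", mse_stacked)
```

The meta model is fitted on out-of-fold predictions rather than on predictions for rows the base models were trained on, so it does not learn to trust a base model that has simply memorised the training data.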

💡 Subscribe for more machine learning tutorials!

🔔 Turn on notifications so you never miss a video!

#MachineLearning #AI #DataScience #DeepLearning #Python #ArtificialIntelligence #DataAnalytics #MLModels #NeuralNetworks #EnsembleLearning #AITrends #BigData #AIResearch #Kaggle #TechTutorials #StackingML
