FAI TALK Jeannette Bohg: A vision for robotics in the age of foundation models

Author: Texas Robotics

Uploaded: 2025-10-08

Views: 239

Jeannette Bohg

Assistant Professor, Stanford

Title: A vision for robotics in the age of foundation models

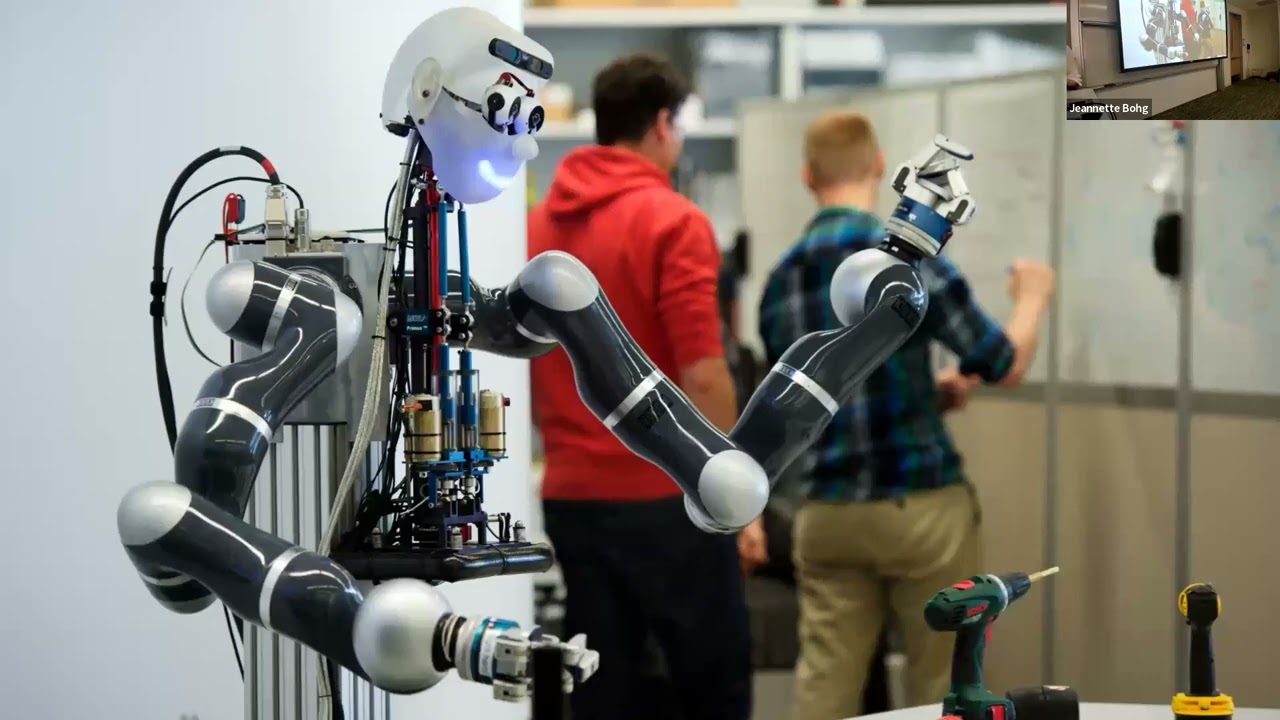

Abstract: My long-term research goal is to enable real robots to manipulate any kind of object such that they can perform many different tasks in a wide variety of application scenarios, such as in our homes, in hospitals, warehouses, or factories. These tasks will require fine sensorimotor skills, for example to use tools, operate devices, assemble parts, deal with deformable objects, and so on. I claim that equipping robots with these sensorimotor skills is one of the biggest challenges in robotics. The currently dominant approach towards achieving this goal of universal sensorimotor skills is using imitation learning and collecting as much robot data as humanly possible. The promise of this approach is a foundation model for robotics. While I believe in the power of data and simple learning models, I think we need to think beyond this to achieve the goal of a generalist robot. In this talk, I will discuss the need for (1) better robot policy architectures, (2) better multi-sensory data, (3) better online and life-long learning algorithms, and (4) better robot hardware.

About the speaker: Jeannette Bohg is an Assistant Professor of Computer Science at Stanford University. She was a group leader at the Autonomous Motion Department (AMD) of the MPI for Intelligent Systems until September 2017. Before joining AMD in January 2012, Jeannette Bohg was a PhD student at the Division of Robotics, Perception and Learning (RPL) at KTH in Stockholm. In her thesis, she proposed novel methods towards multi-modal scene understanding for robotic grasping. She also studied at Chalmers in Gothenburg and at the Technical University in Dresden where she received her Master in Art and Technology and her Diploma in Computer Science, respectively. Her research focuses on perception and learning for autonomous robotic manipulation and grasping. She is specifically interested in developing methods that are goal-directed, real-time and multi-modal such that they can provide meaningful feedback for execution and learning. Jeannette Bohg has received several Early Career and Best Paper awards, most notably the 2019 IEEE Robotics and Automation Society Early Career Award and the 2020 Robotics: Science and Systems Early Career Award.
