RobotLearning: Scaling Offline Reinforcement Learning

Author: Montreal Robotics

Uploaded: 2025-03-20

Views: 363

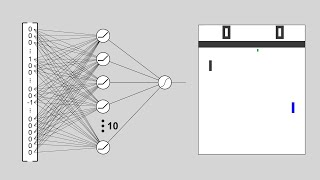

I started by discussing offline reinforcement learning and its potential to learn from pre-existing datasets, a departure from the data inefficiency and divergence issues of online RL. I emphasized the goal of training a policy from offline data without divergence, much as in supervised learning. We explored the concept of "stitching" trajectories, a unique advantage of RL in which optimal paths can be assembled from segments of different trajectories by leveraging the Markov property. However, I also pointed out that this is difficult to achieve in practice, especially under partial observability. We discussed model-based RL as a potential solution but acknowledged the challenge of error accumulation in long-horizon planning. I then introduced the Decision Transformer, a supervised learning approach that takes returns as input to generate trajectories, aiming to minimize error across the entire sequence. However, I noted its limitations in stitching and in handling stochasticity.
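To make "stitching" concrete, here is a minimal sketch under stated assumptions: a hypothetical toy dataset of two deterministic trajectories that share state 2, and plain tabular Q-iteration over that fixed dataset (not a method from the talk). Trajectory A goes 0 → 1 → 2 and then wanders to a zero-reward terminal state; trajectory B goes 3 → 2 → 4 and collects the reward. No single trajectory goes from 0 to the goal, but because the value of state 2 does not depend on how the agent got there (the Markov property), Bellman backups propagate B's reward into A's prefix. Restricting the max to actions seen in the data is an assumed stand-in for the conservatism real offline RL methods need.

```python
from collections import defaultdict

# Hypothetical toy dataset: two trajectories that share state 2.
# Each transition is (state, action, reward, next_state, done).
# Actions are named after their target state for readability.
trajectory_a = [
    (0, "to1", 0.0, 1, False),
    (1, "to2", 0.0, 2, False),
    (2, "to5", 0.0, 5, True),  # A ends in a zero-reward terminal state
]
trajectory_b = [
    (3, "to2", 0.0, 2, False),
    (2, "to4", 1.0, 4, True),  # B reaches the rewarding goal state 4
]
dataset = trajectory_a + trajectory_b


def fitted_q_iteration(transitions, gamma=0.9, iters=50):
    """Tabular Q-iteration over a fixed dataset (no environment interaction).

    The max in the Bellman target only ranges over actions that appear in
    the dataset for the next state, a crude stand-in for the conservatism
    offline RL methods use to avoid out-of-distribution actions.
    """
    actions_in_data = defaultdict(set)
    for s, a, _, _, _ in transitions:
        actions_in_data[s].add(a)
    q = defaultdict(float)
    for _ in range(iters):
        new_q = defaultdict(float)
        for s, a, r, s_next, done in transitions:
            future = 0.0
            if not done and actions_in_data[s_next]:
                future = max(q[(s_next, a2)] for a2 in actions_in_data[s_next])
            new_q[(s, a)] = r + gamma * future
        q = new_q
    return q, actions_in_data


def greedy_rollout(q, actions_in_data, start, transitions, max_steps=10):
    """Follow the greedy policy using the dataset's deterministic transitions."""
    next_state = {(s, a): s_next for s, a, _, s_next, _ in transitions}
    path, s = [start], start
    for _ in range(max_steps):
        if not actions_in_data[s]:  # no data from this state: stop
            break
        a = max(actions_in_data[s], key=lambda a2: q[(s, a2)])
        s = next_state[(s, a)]
        path.append(s)
    return path


q, actions_in_data = fitted_q_iteration(dataset)
print(greedy_rollout(q, actions_in_data, 0, dataset))  # [0, 1, 2, 4]
```

The greedy path from state 0 follows A up to state 2 and then switches to B, a route that appears in neither trajectory. By contrast, a return-conditioned model trained only on these two trajectories never observes a return of 1 from state 0, which illustrates why stitching is hard for purely supervised sequence models such as the Decision Transformer.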

Then I discuss recent papers on adapting offline RL methods to large transformers, on incorporating offline data to improve early training performance, and on performing offline-to-online RL without keeping the original offline dataset around, which is typically required.
