Local RAG PDF Chatbot with LangChain, FAISS & Ollama | PDF Question Answering Demo

Author: Neural Ascent

Uploaded: 2026-01-01

Views: 69

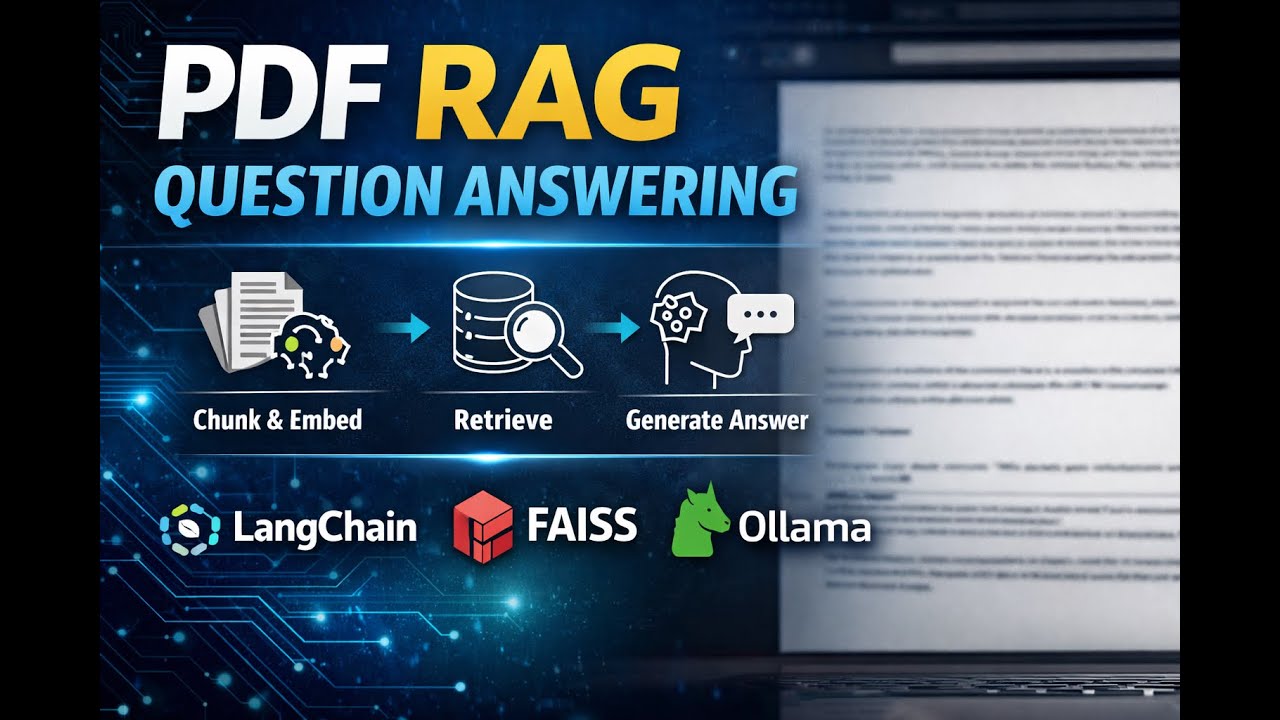

In this video, I demonstrate a PDF‑based Question Answering system built using a Retrieval‑Augmented Generation (RAG) pipeline.

The app lets you upload any PDF and ask questions whose answers are generated only from the document content, powered by a local LLM running through Ollama.

🔗 GitHub Repository

https://github.com/Aakash109-hub/loca...

🧠 What this RAG app does

- Lets you upload a PDF and chat with it in natural language

- Retrieves the most relevant chunks from the document using vector search

- Generates grounded answers from a local LLM instead of calling cloud APIs
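To make the vector-search step concrete, here is a minimal, dependency-free sketch of the idea. It is not the code from the repository: the `embed` function is a toy word-count stand-in for a real sentence-transformer embedding, the sorted list stands in for a FAISS index, and the sample chunks are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (assumption: stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(chunks, question, k=2):
    """Return the k chunks most similar to the question (brute-force search)."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)
    return ranked[:k]


# Hypothetical chunks, as if produced by splitting a PDF.
chunks = [
    "The warranty covers parts and labor for two years.",
    "Returns are accepted within 30 days of purchase.",
    "Our office is closed on public holidays.",
]
best = top_k(chunks, "How long is the warranty?", k=1)
print(best)
```

Only the retrieved chunks are passed to the LLM, which is what keeps the answers tied to the document rather than to the model's general knowledge.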

🛠 Tech stack used

- PyPDFLoader for loading PDF documents

- RecursiveCharacterTextSplitter for smart text chunking

- HuggingFace sentence‑transformer embeddings for vector representations

- FAISS as the vector database for fast similarity search

- LangChain to orchestrate the RAG pipeline (retrieval + generation)

- Ollama for local LLM‑based answer generation

- Streamlit for the web user interface
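As a rough illustration of how these pieces can fit together, the sketch below wires the listed components into a load, split, embed, index, retrieve, and generate flow. It is an assumption-laden outline, not the repository's code: the import paths assume recent `langchain-community`, `langchain-text-splitters`, `langchain-huggingface`, and `langchain-ollama` packages (plus `pypdf`, `faiss-cpu`, and `sentence-transformers`), and the embedding model name, the Ollama model name `llama3`, the chunk sizes, and the prompt wording are all placeholder choices. The Streamlit UI is left out.

```python
def build_prompt(context_chunks, question):
    """Build a prompt that asks the model to answer only from the retrieved context."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def build_index(pdf_path, embedding_model="sentence-transformers/all-MiniLM-L6-v2"):
    """Load a PDF, split it into chunks, embed them, and store them in FAISS."""
    # Imports are local so the helper above works without these packages installed.
    from langchain_community.document_loaders import PyPDFLoader
    from langchain_community.vectorstores import FAISS
    from langchain_huggingface import HuggingFaceEmbeddings
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    pages = PyPDFLoader(pdf_path).load()
    # Chunk size and overlap are assumed values; tune them for your documents.
    splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)
    chunks = splitter.split_documents(pages)
    embeddings = HuggingFaceEmbeddings(model_name=embedding_model)
    return FAISS.from_documents(chunks, embeddings)


def answer(index, question, model="llama3", k=4):
    """Retrieve the k most similar chunks and ask a local Ollama model to answer."""
    from langchain_ollama import ChatOllama

    docs = index.similarity_search(question, k=k)
    prompt = build_prompt([d.page_content for d in docs], question)
    llm = ChatOllama(model=model, temperature=0)  # assumes this model is pulled in Ollama
    return llm.invoke(prompt).content
```

With Ollama running locally, usage would look something like `index = build_index("report.pdf")` followed by `print(answer(index, "What is the main conclusion?"))`, where `report.pdf` is a hypothetical file name. Building the index once per uploaded PDF and reusing it across questions avoids re-embedding the document on every query.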

🎯 Who this video is for

- Developers and students exploring RAG and PDF Question Answering

- Anyone interested in running local LLMs for privacy‑friendly document QA

- Recruiters reviewing my AI/ML projects and practical GenAI skills
